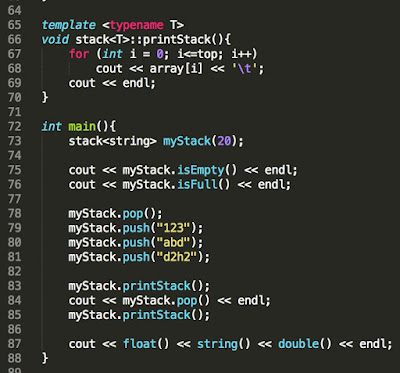

I was asked this question in a Google Interview. I did not know templates then, which is fair because it was not my primary programming language then so the programmer described it to me and I used it fine then. Now, as I am learning about templates, maps and vectors I am attempting to redo the question.

Problem: Imagine there are 2 arrays/lists. Return the relative position mapping of them.

list1 = {"apple", "banana", "orange", "honey"}

list2 = {"banana","apple", "honey", "orange"}

Answer: {1,0,3,2}

Follow-up1: What if this list had repeated items?

Follow-up2: What if the data type of this list was unknown?

1. It is easier to convert the array into a vector because vectors have more inbuilt functions like size.

2. You can not directly send an array to a function and even compute the sizeof operation on the array pointer. For instance if I did:

It prints length 0. Unlike C where sizeof works perfectly in functions also when you pass the pointer to the array.

3. Important to check if the object is found in the map.

4. Using an alternative to iterator over list1 because we need the index as the value in key-value pair of the map.

Follow-up 1:

If I had 2 same objects, the key-value pair will over write the value to the highest index value or the last value that was added. This is because all keys in a map are unique, we can't have 2 keys of same name. Therefore, I converted the value from being a single integer to being a vector of integers which stores all the value occurrences of a particular key.

The interesting thing to note here is it->second is now a pointer to a vector of ints which stores all the index occurrences of a key. After using one index of "orange", we delete that value from this vector of values, so that next time a unique index is returned. Also, it is important to check (line 25) that the vector of values is not empty, else when an object is called for more than it appears in list 1, it will cause segmentation fault. With and without the second condition and the following example:

Without condition:

2 0 1 3 3

segmentation fault

With condition:

2 0 1 3

not found

Follow-up 2:

I used template function to change the function myMap so that it can work with any data type of input including string, int, double, etc..

Output:

20134

24310

1. while defining a iterator to map or vector which has an argument of type T, you need to mention typename in front of it.